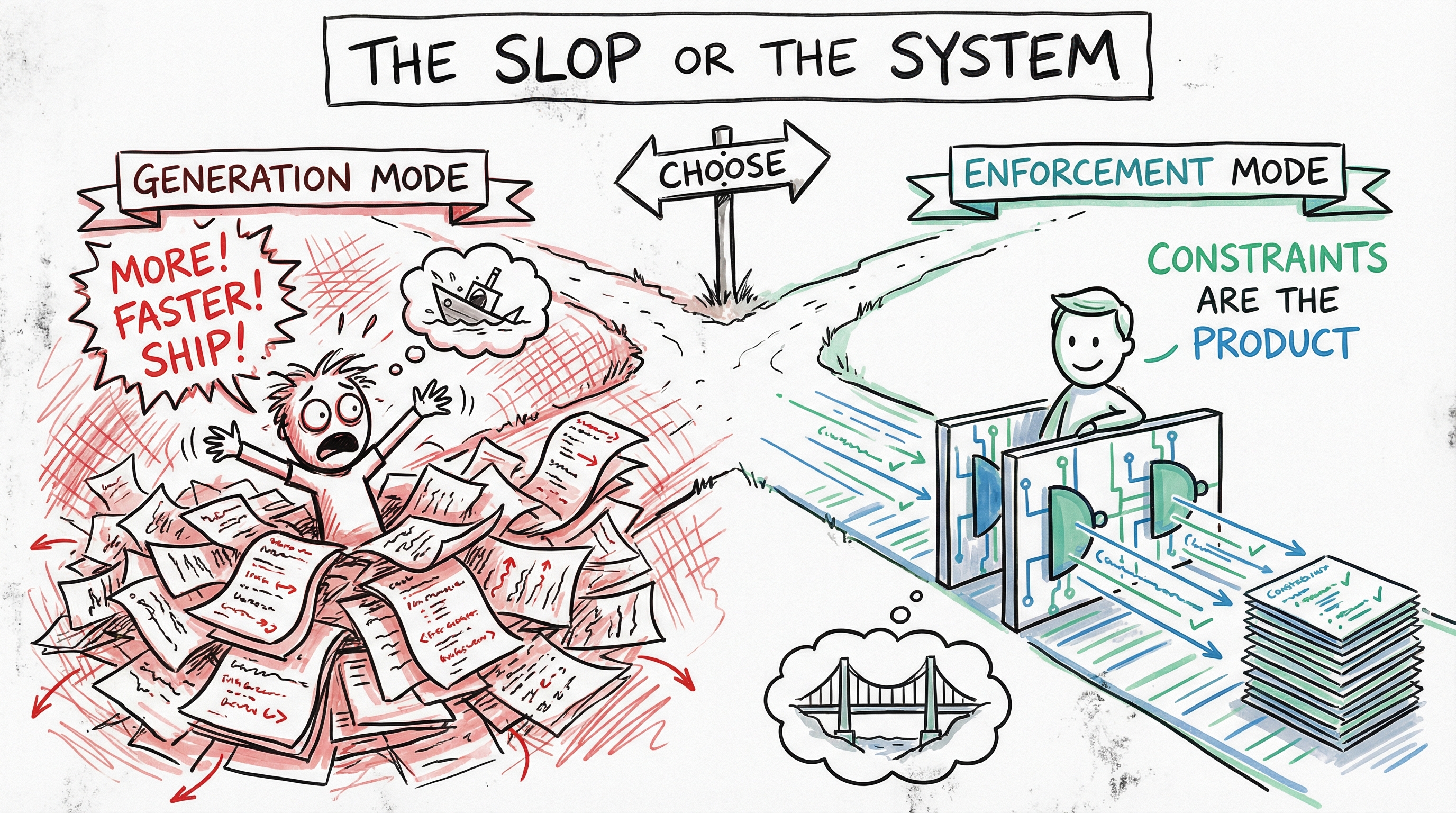

If you don't engineer backpressure, you'll get slopped

"If you don't engineer backpressure, you'll get slopped"

The term "slop" has become the defining word for our AI moment. It describes the flood of low-quality, AI-generated content on the internet: blog posts that say nothing, code that almost works, images with seven fingers.

Geoffrey Huntley's observation cuts to the core of the problem. He's one of the few people who see what's actually happening. While most developers celebrate having "learned to vibe code," they're missing the deeper question entirely: how do you constrain what the AI produces?

In distributed systems, backpressure prevents upstream producers from overwhelming downstream consumers. Without it, queues overflow, systems crash, and quality disappears.
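To make the distributed-systems version concrete: the simplest form of backpressure is a bounded queue whose push() makes the producer wait while the buffer is full. What follows is a minimal TypeScript sketch under that assumption (single process, async/await, no timeouts or cancellation). The class name and capacity are illustrative, not taken from any particular library.

```typescript
// Minimal bounded async queue. push() waits while the queue is full,
// so a fast producer is slowed to the consumer's pace: that wait is the backpressure.
class BoundedQueue<T> {
  private readonly items: T[] = [];
  private readonly waitingProducers: Array<() => void> = [];
  private readonly waitingConsumers: Array<(item: T) => void> = [];
  private readonly capacity: number;

  constructor(capacity: number) {
    if (capacity < 1) throw new RangeError("capacity must be at least 1");
    this.capacity = capacity;
  }

  get size(): number {
    return this.items.length;
  }

  async push(item: T): Promise<void> {
    // Block until there is room, re-checking after every wake-up.
    while (this.items.length >= this.capacity) {
      await new Promise<void>((resolve) => this.waitingProducers.push(resolve));
    }
    // If a consumer is already waiting (queue empty), hand the item over directly.
    const consumer = this.waitingConsumers.shift();
    if (consumer) {
      consumer(item);
      return;
    }
    this.items.push(item);
  }

  async pop(): Promise<T> {
    if (this.items.length > 0) {
      const item = this.items.shift() as T;
      // A slot just freed up: wake one blocked producer, if any.
      this.waitingProducers.shift()?.();
      return item;
    }
    // Queue empty: wait for the next push.
    return new Promise<T>((resolve) => this.waitingConsumers.push(resolve));
  }
}
```

Node's own streams follow the same idea: Writable.write() returns false once the internal buffer passes its highWaterMark, and a well-behaved producer waits for the 'drain' event before writing more.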

The same principle applies to AI. But here's what almost nobody talks about: this is entirely a mindset problem.

The mindset gap

Most developers now know how to prompt. They can get Claude to write functions, generate tests, scaffold projects. The tooling works. That's table stakes.

But knowing how to generate output is not the same as knowing how to enforce quality. The gap between "I can make AI write code" and "I can build reliable systems with AI" is vast. And it's almost entirely mental.

The people winning in this new world aren't the best prompters. They're the ones who've internalized a different question: How do I constrain this?

The old world was about capability. Can I build this? The new world is about enforcement. Can I guarantee this meets my bar?

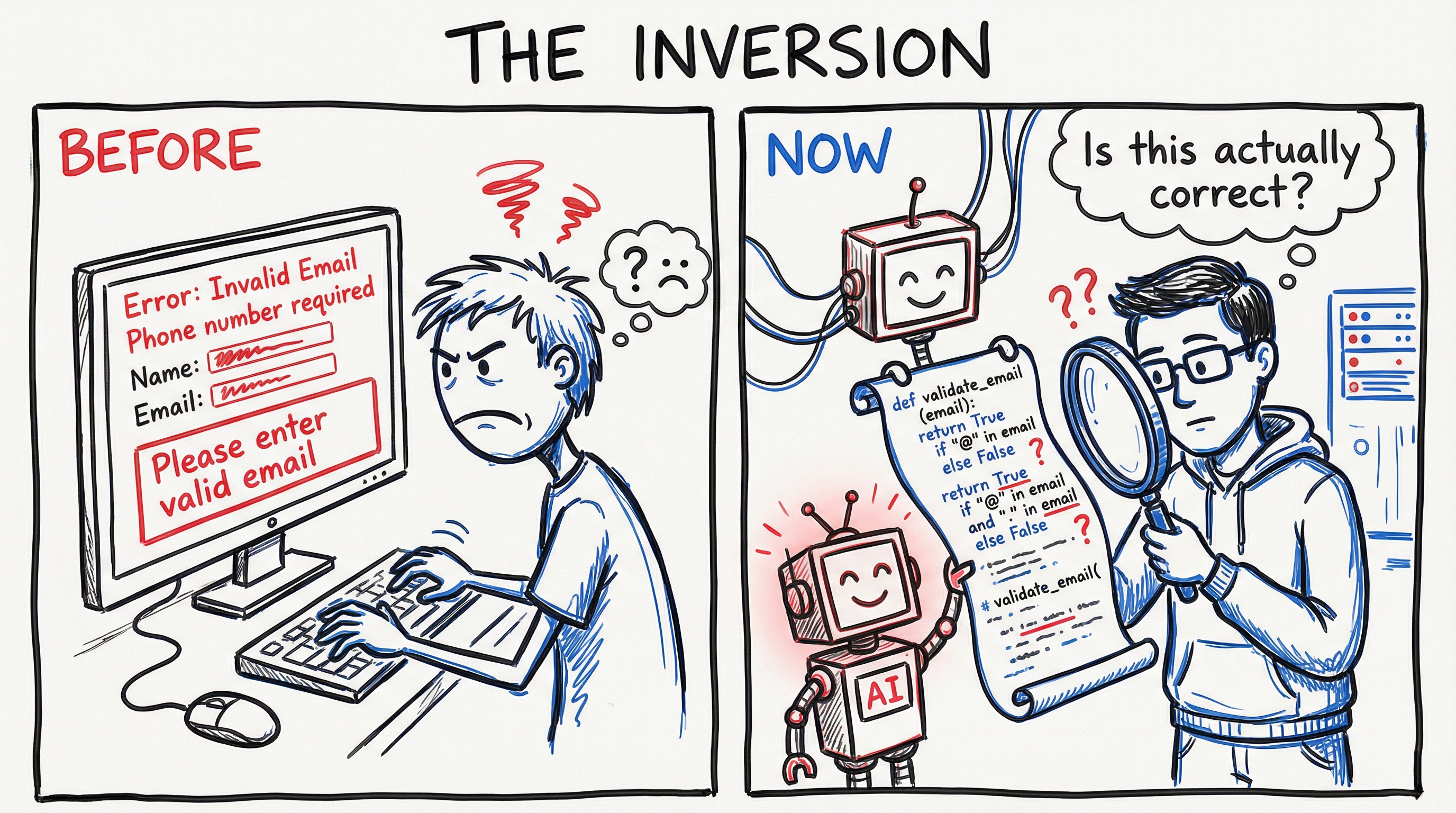

The inversion

Think about how software used to work.

Programs enforced constraints on humans. Form validation. Required fields. Input masks. The computer made sure the user did things correctly.

Now the roles have flipped.

You are the verification layer. The AI is the producer. Your job is to enforce constraints on its output, just like software used to enforce constraints on yours.

This inversion changes everything about how you think. You're not collaborating with a tool. You're validating an untrusted producer. Every output is suspect until proven otherwise.

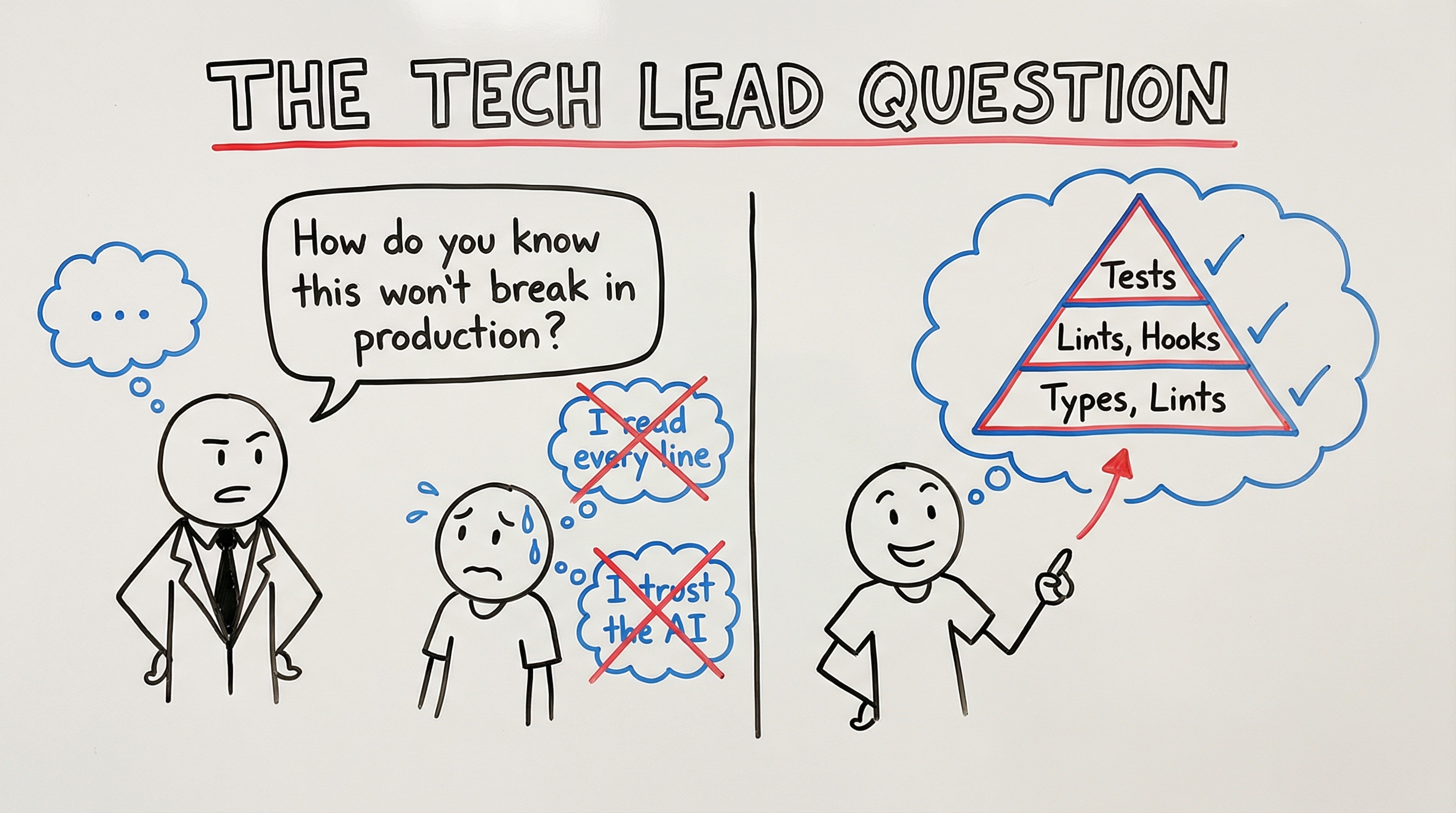

Think like a tech lead

Imagine your boss asks: "How do you know this won't break in production?"

You can't say "I read every line." That's not possible anymore. The output volume is too high.

You can't say "I trust the AI." That's not an answer.

So what do you say?

You describe your system of guarantees. The layers of enforcement that make failure unlikely. The constraints that shape every piece of code before it ever reaches production.

This is the new job. Not writing code. Designing the system that makes AI-generated code trustworthy.

Zero tolerance for smell

Here's what this means in practice: you don't tolerate code smell. Ever.

If something feels off, it is off. If a pattern looks suspicious, investigate. If you're not certain about a piece of code, you don't ship it.

The old mindset was "it works, ship it." The new mindset is "I'm certain this is correct, ship it."

This sounds slower. It's not, because the certainty comes from your verification layers, not from manual review. You're not reading every line. You're building systems that give you confidence without reading every line.

But you have to actually build those systems. And you have to trust them only as much as they deserve to be trusted. If your tests are weak, your confidence should be low. If your types have holes, your guarantees are hollow.

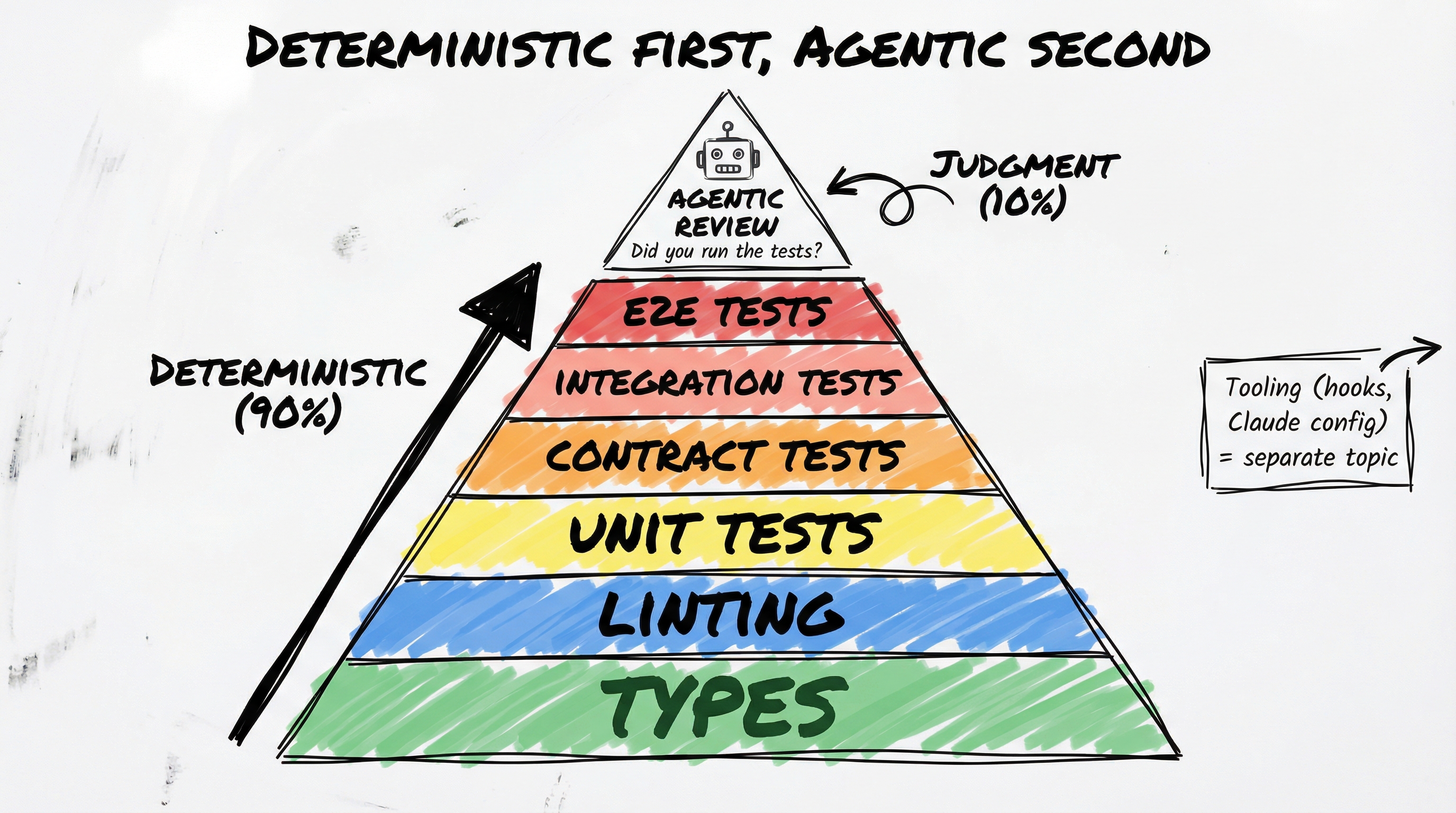

Deterministic first, agentic second

The most important principle: deterministic constraints come first.

Before any AI reviews your code, before any agentic verification runs, you want hard guarantees: checks that catch errors the same way every time. No judgment calls. No "probably fine."

The hierarchy:

Types. The strictest settings possible. No any types. No escape hatches. Every type flows from a single source of truth. When types are strict, the AI walks a narrow corridor, and whole classes of deviation become structurally impossible.
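Here is a small sketch of what "single source of truth" and "no escape hatches" can look like in TypeScript, assuming strict compiler settings such as "strict": true and "noUncheckedIndexedAccess": true in tsconfig.json. The order-status domain and every name in it are hypothetical. The allowed values are declared once as data, the type is derived from them, untrusted input arrives as unknown rather than any, and an exhaustive switch turns a newly added status into a compile error until it is handled.

```typescript
// Single source of truth: the allowed statuses are declared once, as data...
const ORDER_STATUSES = ["pending", "paid", "shipped"] as const;

// ...and the type is derived from that data, so the two cannot drift apart.
type OrderStatus = (typeof ORDER_STATUSES)[number];

// Untrusted input arrives as `unknown`, never `any`, and must be narrowed before use.
function isOrderStatus(value: unknown): value is OrderStatus {
  return typeof value === "string" && (ORDER_STATUSES as readonly string[]).includes(value);
}

function parseStatus(input: unknown): OrderStatus | null {
  return isOrderStatus(input) ? input : null;
}

// Exhaustive switch: adding a status to ORDER_STATUSES makes the `never`
// assignment below fail to compile until this function handles it.
function statusLabel(status: OrderStatus): string {
  switch (status) {
    case "pending":
      return "Awaiting payment";
    case "paid":
      return "Paid";
    case "shipped":
      return "On its way";
    default: {
      const unhandled: never = status;
      return unhandled;
    }
  }
}
```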

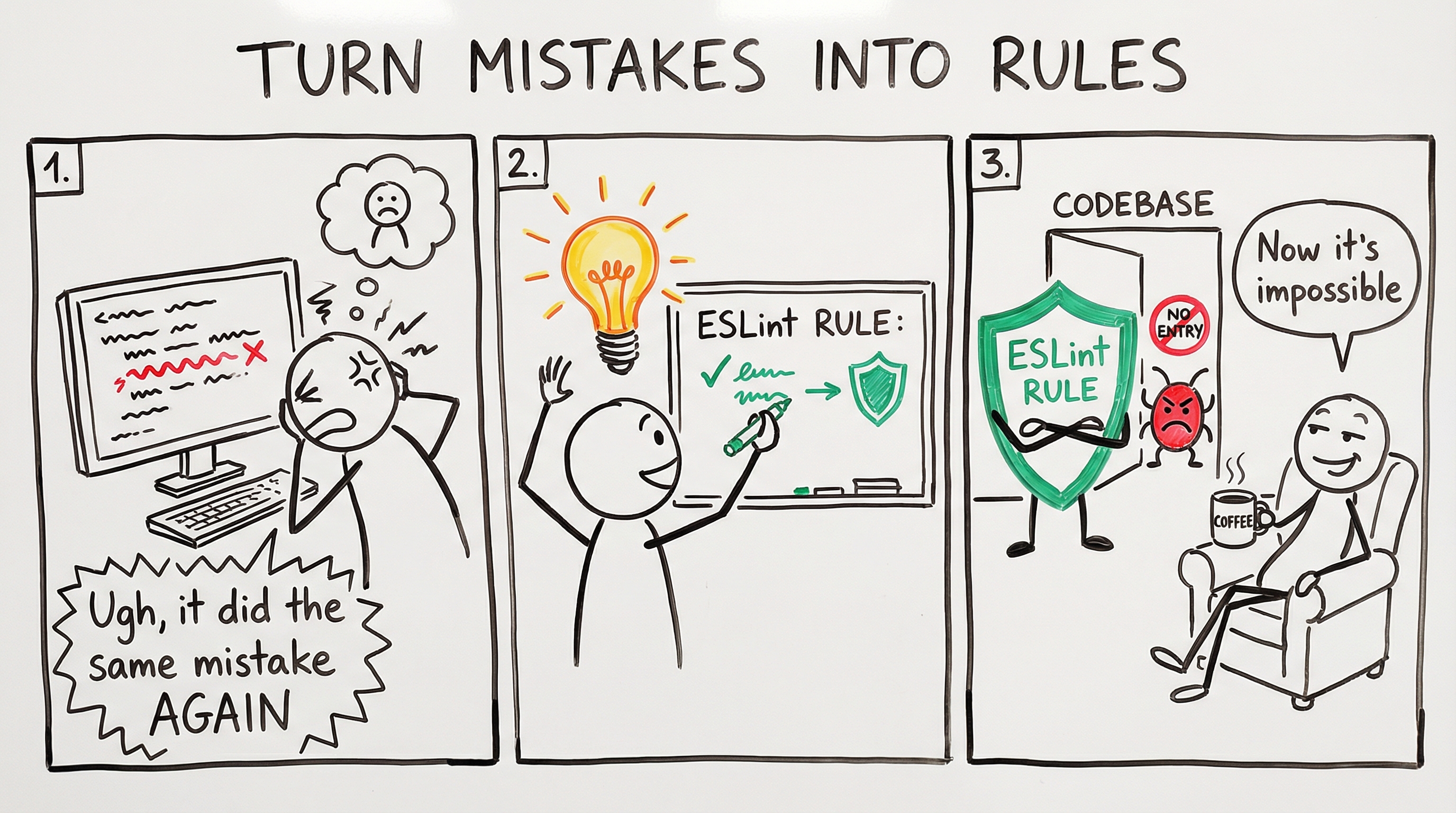

Linting. As hard as you can push it. Think about every rule you want to enforce. What patterns should never appear? What conventions must always hold? Encode them. Make violations fail the build.

Custom ESLint rules are where this gets powerful. Here are examples from my codebase - not to copy, but to show what's possible:

- no-plain-error-throw: Use TransientError, FatalError, or UnexpectedError instead of plain Error. This enables proper retry logic and error classification.

- no-silent-catch: An empty catch block swallows errors silently. Either rethrow or handle the error properly.

- no-schema-parse: Avoid schema.parse() - it throws uncontrolled errors. Use safeParse() for input validation or unsafeParse() for database reads.

- prefer-server-actions: Prefer server actions over fetch() to internal API routes for end-to-end type safety.
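The no-plain-error-throw rule only pays off if something downstream reads the classification. A minimal sketch, assuming TransientError means "safe to retry" and everything else should fail fast. The class names come from the rule; their bodies and the withRetry helper are hypothetical, and a production version would add backoff between attempts.

```typescript
// Hypothetical error taxonomy matching the names in the lint rule.
// TransientError: safe to retry (timeouts, rate limits).
class TransientError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "TransientError";
  }
}

// FatalError: retrying cannot help (bad input, missing permissions).
class FatalError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "FatalError";
  }
}

// UnexpectedError: a bug or broken invariant; surface it loudly.
class UnexpectedError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "UnexpectedError";
  }
}

// Retries only TransientError; every other error is rethrown immediately.
// No backoff here, to keep the sketch short.
async function withRetry<T>(operation: () => Promise<T>, maxAttempts = 3): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      if (!(error instanceof TransientError)) throw error;
      lastError = error;
    }
  }
  throw lastError;
}
```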

These are just examples. The point isn't to copy my rules. The point is: what mistakes does AI keep making in your codebase? What patterns do you keep correcting? Those are your rules. Write them. Every rule you encode is a class of errors that becomes impossible.
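As a sketch of how small a custom rule can be, here are hypothetical implementations of no-plain-error-throw and no-silent-catch in ESLint's rule shape: a meta object plus a create(context) function that returns AST visitors and calls context.report. To keep the block self-contained it declares minimal local types; in a real plugin you would type these as Rule.RuleModule from the eslint package. The matching is deliberately narrow (it only recognizes a bare Error identifier).

```typescript
// Minimal local stand-ins for ESTree nodes and ESLint's rule context.
// In a real plugin, type the rules as Rule.RuleModule from "eslint".
interface AstNode {
  type: string;
  [key: string]: unknown;
}

interface RuleContext {
  report(descriptor: { node: AstNode; messageId: string }): void;
}

// Flags `throw new Error(...)` and `throw Error(...)`.
const noPlainErrorThrow = {
  meta: {
    type: "problem",
    docs: { description: "Require a classified error type instead of plain Error" },
    messages: {
      plainError: "Use TransientError, FatalError, or UnexpectedError instead of plain Error.",
    },
    schema: [],
  },
  create(context: RuleContext) {
    return {
      ThrowStatement(node: AstNode) {
        const arg = node.argument as AstNode | null;
        if (!arg || (arg.type !== "NewExpression" && arg.type !== "CallExpression")) return;
        const callee = arg.callee as AstNode;
        if (callee.type === "Identifier" && callee.name === "Error") {
          context.report({ node, messageId: "plainError" });
        }
      },
    };
  },
};

// Flags catch clauses whose block contains no statements.
const noSilentCatch = {
  meta: {
    type: "problem",
    docs: { description: "Disallow empty catch blocks" },
    messages: {
      silentCatch: "Empty catch block swallows errors silently. Either rethrow or handle the error properly.",
    },
    schema: [],
  },
  create(context: RuleContext) {
    return {
      CatchClause(node: AstNode) {
        const block = node.body as { body: unknown[] };
        if (block.body.length === 0) {
          context.report({ node, messageId: "silentCatch" });
        }
      },
    };
  },
};
```

To make violations fail the build, one option is registering the rules through a local plugin object in a flat config (plugins: { local: { rules: { "no-plain-error-throw": noPlainErrorThrow } } }) and setting "local/no-plain-error-throw": "error", since ESLint exits non-zero when any error-level rule reports.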

Unit tests. Fast feedback on isolated logic. Does this function do what it claims?

Contract tests. These create fixtures. They verify your assumptions about external systems. When the real API changes, contract tests break first.

Integration tests. Components working together. The fixtures from contract tests feed into integration tests.

E2E tests. The final gate before production. Real browser automation. Real user flows.

Each layer is deterministic. Given the same input, you get the same result. Pass or fail. No ambiguity.
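The handoff from contract tests to integration tests is the least obvious part of this stack, so here is one way it could be wired, sketched under assumptions: the external payments API, its response fields, and every function name are hypothetical. The contract test fetches a real response (injected as a function so it can be faked), checks it against the shape the code assumes, and returns the verified payload as a fixture. A real setup would write that fixture to disk so integration tests load it instead of hand-written mocks.

```typescript
// Hypothetical response shape we assume an external payments API returns.
interface Charge {
  id: string;
  amountCents: number;
  currency: string;
}

// The contract: our assumptions about the external response, checked at runtime.
function assertChargeContract(value: unknown): Charge {
  if (typeof value !== "object" || value === null) {
    throw new TypeError("charge: expected an object");
  }
  const { id, amountCents, currency } = value as Record<string, unknown>;
  if (typeof id !== "string") throw new TypeError("charge.id: expected a string");
  if (typeof amountCents !== "number" || !Number.isInteger(amountCents)) {
    throw new TypeError("charge.amountCents: expected an integer");
  }
  if (typeof currency !== "string") throw new TypeError("charge.currency: expected a string");
  return { id, amountCents, currency };
}

// Contract test: call the real API (injected so it can be faked), verify the
// contract, and return the verified payload to be saved as a fixture.
// When the real API changes shape, this is the test that breaks first.
async function runChargeContractTest(fetchCharge: () => Promise<unknown>): Promise<Charge> {
  return assertChargeContract(await fetchCharge());
}

// Code exercised by an integration test that loads the fixture instead of a hand-written mock.
function formatReceipt(charge: Charge): string {
  return `${charge.id}: ${(charge.amountCents / 100).toFixed(2)} ${charge.currency.toUpperCase()}`;
}
```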

The key: the AI must be able to run these layers on itself. This is non-negotiable. If the AI generates code but can't run pnpm type-check and pnpm lint and pnpm test, you've built a system where you're still the bottleneck. The AI generates, runs the checks, sees the failures, fixes them, runs again. You only see the output after it passes. That's the loop.
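A minimal sketch of the deterministic half of that loop, assuming Node.js and package scripts named type-check, lint, and test as in the text: run each check in order, stop at the first failure, and return its output so the agent can read it, fix the code, and run again. How the agent gets told to call this is a separate topic.

```typescript
import { spawnSync } from "node:child_process";

interface Check {
  name: string;
  command: string;
  args: string[];
}

type CheckOutcome = { ok: true } | { ok: false; failed: string; output: string };

// Cheapest checks first; script names assumed to match the ones in the text.
const CHECKS: Check[] = [
  { name: "type-check", command: "pnpm", args: ["type-check"] },
  { name: "lint", command: "pnpm", args: ["lint"] },
  { name: "test", command: "pnpm", args: ["test"] },
];

// Runs each check in order and stops at the first failure, returning its
// output so the agent can read the errors, fix the code, and run again.
function runChecks(checks: Check[]): CheckOutcome {
  for (const check of checks) {
    const result = spawnSync(check.command, check.args, { encoding: "utf8" });
    if (result.status !== 0) {
      // status is null if the process failed to start or was killed by a signal.
      const output = result.error ? result.error.message : `${result.stdout}${result.stderr}`;
      return { ok: false, failed: check.name, output };
    }
  }
  return { ok: true };
}
```

Calling runChecks(CHECKS) gives a single pass/fail answer; only an { ok: true } result should ever reach a human.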

(Tooling - hooks, Claude config, stop conditions - is its own topic. The point here is the verification hierarchy itself.)

Agentic backpressure

Some things you can't check deterministically.

Does this code actually solve the problem? Is the architecture sound? Does this change make sense in context? Did you actually run the relevant tests? These require judgment.

This is where agentic backpressure comes in. AI verifying AI's work. "Have you verified this with all relevant tests?" as a stop condition. But only after the deterministic layers have done their work.

Agentic verification is expensive and unreliable. It's a judgment call, which means it can be wrong. You want to minimize how much you rely on it.

Deterministic constraints catch 90% of problems instantly. Agentic review handles the remaining 10% that require context and reasoning.

If you flip this ratio, if you rely on agentic review for things that could be checked deterministically, you're building on sand.
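The ordering can be made explicit in code. This sketch assumes each layer is wrapped as an async gate that returns a pass/fail verdict; the agentic reviewer is a placeholder for whatever model call you use, and all names are hypothetical. The agentic gate runs only after every deterministic gate has passed, so it never spends judgment on something a type checker or linter could have caught.

```typescript
type Verdict = { pass: true } | { pass: false; reason: string };

interface Gate {
  name: string;
  run: () => Promise<Verdict>;
}

type StagedVerdict = Verdict & { stage: string };

// Deterministic gates run first, in order; the first failure short-circuits.
// The agentic review only runs once every deterministic gate has passed.
async function verify(deterministicGates: Gate[], agenticReview: Gate): Promise<StagedVerdict> {
  for (const gate of deterministicGates) {
    const verdict = await gate.run();
    if (!verdict.pass) return { ...verdict, stage: gate.name };
  }
  const verdict = await agenticReview.run();
  return { ...verdict, stage: agenticReview.name };
}
```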

Context engineering, not context dumping

My CLAUDE.md is not several hundred lines. That would be bad context engineering.

The goal isn't to dump every rule you can think of into the AI's context. The goal is to encode constraints where they belong:

- Type constraints belong in the type system

- Pattern constraints belong in lint rules

- Behavioral constraints belong in tests

What goes in CLAUDE.md? The things that can't be encoded elsewhere. Architectural intent. Domain knowledge. The "why" behind decisions.

If you find yourself writing "always do X" in your AI instructions, ask: can this be a lint rule? Can this be a type constraint?

If yes, put it there. Deterministic enforcement beats instruction-following every time.
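As an example of moving an instruction out of CLAUDE.md and into the type system, take a hypothetical rule like "always validate email addresses before sending mail." A branded type turns the unvalidated path into a compile error instead of a request the model has to remember. The Email brand, parseEmail, and sendWelcome are illustrative names, and the regex is intentionally simple rather than a full RFC 5322 check.

```typescript
// A branded type: structurally a string, but only obtainable through parseEmail.
type Email = string & { readonly __brand: "Email" };

type ParseResult<T> = { ok: true; value: T } | { ok: false; error: string };

function parseEmail(input: string): ParseResult<Email> {
  const trimmed = input.trim();
  // Deliberately simple check for the sketch; not a full RFC 5322 validator.
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(trimmed)) {
    return { ok: false, error: `invalid email: ${input}` };
  }
  return { ok: true, value: trimmed as Email };
}

// "Always validate first" now lives in the signature:
// sendWelcome("bob@example.com") is a type error, because string is not Email.
function sendWelcome(to: Email): string {
  return `welcome mail queued for ${to}`;
}
```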

The guarantee question

Come back to the tech lead mental model.

"How do you know this won't break?"

The answer is a stack of guarantees:

"The type system makes invalid states unrepresentable. Lint rules catch every pattern we've identified as problematic. Unit tests verify isolated behavior. Contract tests verify our assumptions about external systems and create fixtures. Integration tests verify components work together. E2E tests verify real user flows in production-like environments."

"And for the things we can't check deterministically - did we run the right tests? does this actually solve the problem? - we have agentic review as a final layer."

This is the answer. Not "I trust the AI" or "I reviewed it myself." A system of layered guarantees where each layer catches what the previous one missed.

The slop is already here

Look around. The internet is filling with AI-generated garbage. Codebases are accumulating technical debt at unprecedented rates. People are shipping faster than ever and understanding less than ever.

This is what happens when you optimize for generation without optimizing for enforcement.

The people who recognize this, who internalize that constraints are the product, will build things that actually work. Everyone else will drown in their own output.

Huntley is right. You either engineer backpressure, or you get slopped.

Some tools mentioned: trigger-cli, a self-coded CLI for Trigger.dev, and wt, for a git worktree workflow.