The Single Best One-Shot Prompt I've Ever Seen

I recently asked an AI to rebuild a website. One prompt. No back-and-forth. No elaborate system instructions. No twenty-page CLAUDE.md file.

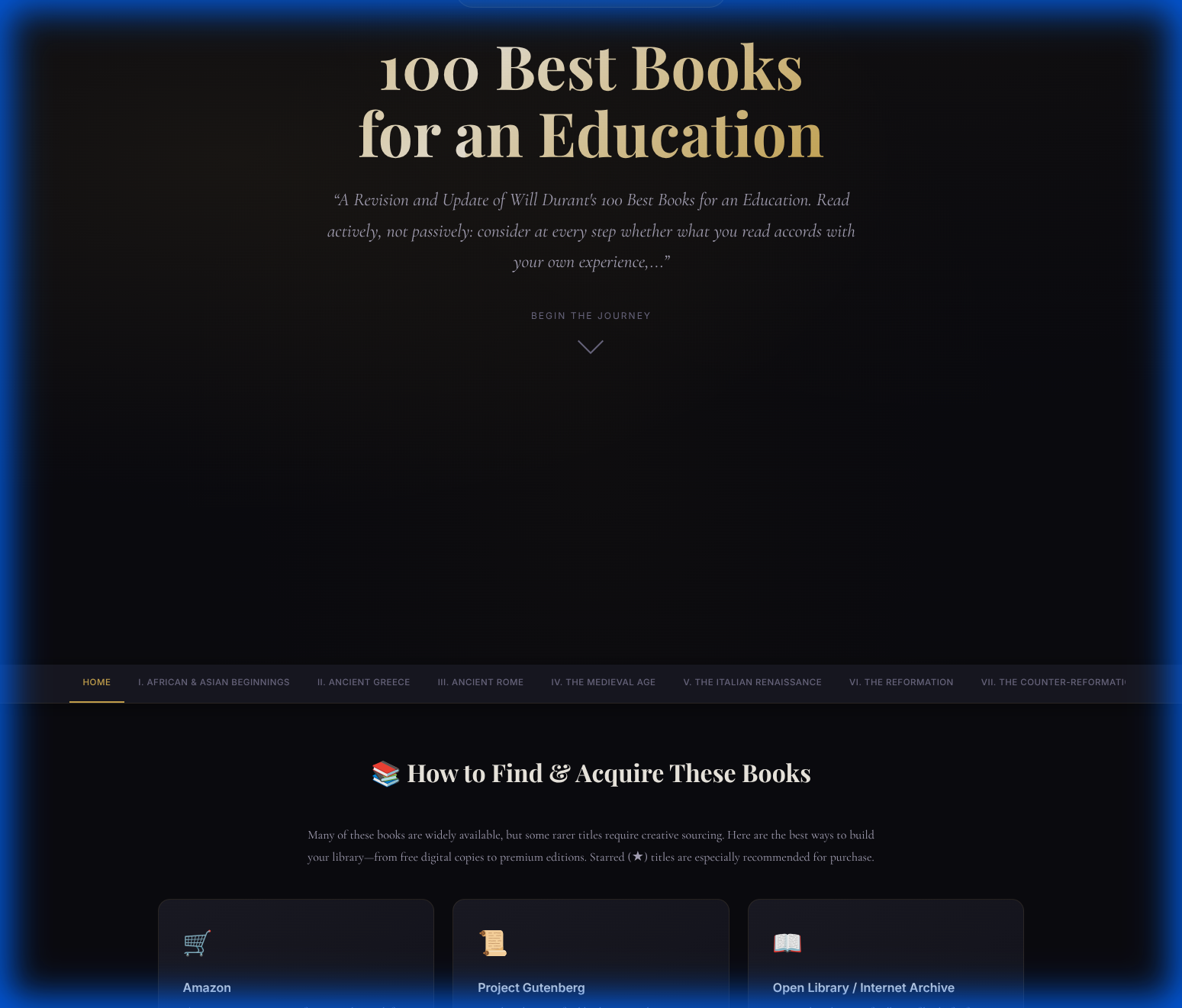

The result blew me away. A plain-text reading list became a dark-themed web application with book covers, sourcing guides, expandable summaries, and a sticky navigation system. In a single shot.

But here's the thing: I didn't write a good prompt. The content itself was the prompt.

The site that prompted itself

The project was 100 Best Books for an Education, a revised version of Will Durant's classic reading list. The original page was simple: walls of text, bullet-point lists, no images. But the writing on that page was anything but simple. It spoke of "Wisdom's Citadel" and "the Country of the Mind." It described a library with "slender casements opening on quiet fields, voluptuous chairs inviting communion and reverie." Confucius and Plato, Caesar and Christ, Leonardo and Montaigne.

When I asked the AI to rebuild this page, the output matched the register of the input. No generic Bootstrap template. No card components. Instead: a dark, scholarly aesthetic with gold accents and Playfair Display serif typography. A shimmering gradient on the title. CSS class names like .hero-badge. The whole thing felt like the content it was serving.

And I didn't ask for any of that.

→ See the live site at onehundredbestbooks.org

Here is the prompt, in its entirety:

Can you completely rebuild this page with images and amazon (links "ill add my
affiliatle link later and ideas on how to get the books when they are not on
amazon there should be images everything summaries take a long long time ill be
going to the gym you have a long time keep going

The vibe theory of prompting

I think this is one of the least discussed insights about working with large language models:

The quality of your input doesn't just affect the accuracy of the output. It affects the entire probability distribution of what gets generated.

Every token the model produces is a choice, conditioned on everything that came before it. When the preceding text is well-crafted, the model's next tokens get pulled toward a region of its output space where quality lives. Not because it "understands" quality, but because in the training data, good outputs tend to follow good inputs. Beautiful prose begets beautiful design. Careful thinking begets careful implementation.
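Here is a toy sketch of that idea in Python. Everything in it is invented for illustration: the three candidate tokens, their scores, and the context_nudge values are assumptions, not measurements from any real model. It only shows the mechanism, which is that shifting the scores shifts the whole distribution, not just the top pick.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Hypothetical next-token scores for a font choice (made-up numbers).
base_logits = {"Arial": 2.0, "Helvetica": 1.5, "Playfair Display": 0.5}

# Hypothetical shift that a carefully written, literary context might apply.
context_nudge = {"Arial": -1.0, "Helvetica": -0.5, "Playfair Display": 2.0}

plain = softmax(base_logits)
nudged = softmax(
    {tok: base_logits[tok] + context_nudge[tok] for tok in base_logits}
)

for tok in base_logits:
    print(f"{tok:18s} plain={plain[tok]:.2f}  nudged={nudged[tok]:.2f}")
```

With these invented numbers, the most likely token flips from Arial to Playfair Display, and every other probability moves along with it.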

I keep calling this the "Vibe Theory of Prompting": the quality and intentionality of your input moves the model's whole trajectory. It's not about keywords or instruction-following. It's about where your input sits in the space of all text.

The 100 Best Books content, hand-crafted prose about the greatest works of human civilization, naturally attracted a response from the same neighborhood. The AI reached for design patterns and typography choices that live in the same quality tier. Gold accents instead of Bootstrap blue. Cormorant Garamond instead of Arial. Smooth scroll animations instead of anchor jumps.

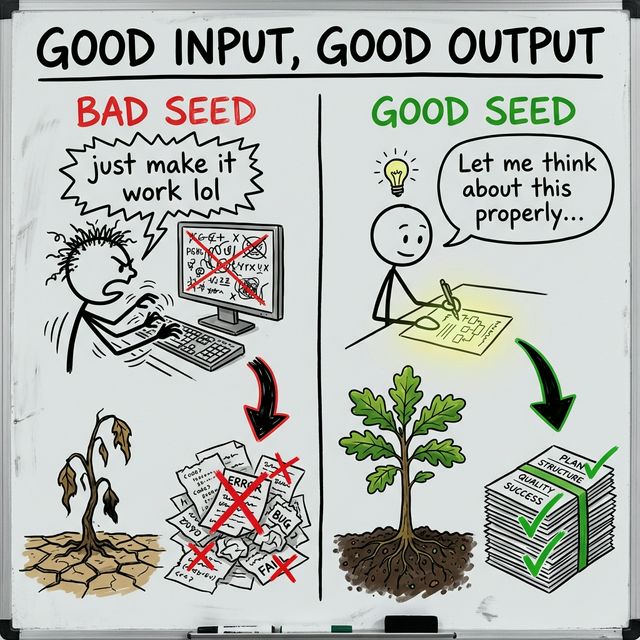

Good input, good output — but not how you think

"Good Input, Good Output" sounds obvious. Of course better prompts get better results. But the conventional wisdom focuses on the wrong kind of good. The prompt engineering community obsesses over precise instructions, role-playing setups ("You are a senior engineer..."), chain-of-thought scaffolding, few-shot examples, temperature tuning.

All useful. All missing the deeper signal: the quality of the substance matters more than the quality of the instructions.

Consider two scenarios:

Say you have a beautifully written spec for a product that solves a real problem, with clear thinking about edge cases and thoughtful language throughout. You paste it into an AI and say "Build this." Zero prompt engineering.

Now compare: a sloppy, half-baked idea full of contradictions. But you wrap it in a perfect prompt with role assignments, output format specs, chain-of-thought instructions, and a detailed CLAUDE.md file.

The first one wins. Every time. Not even close.

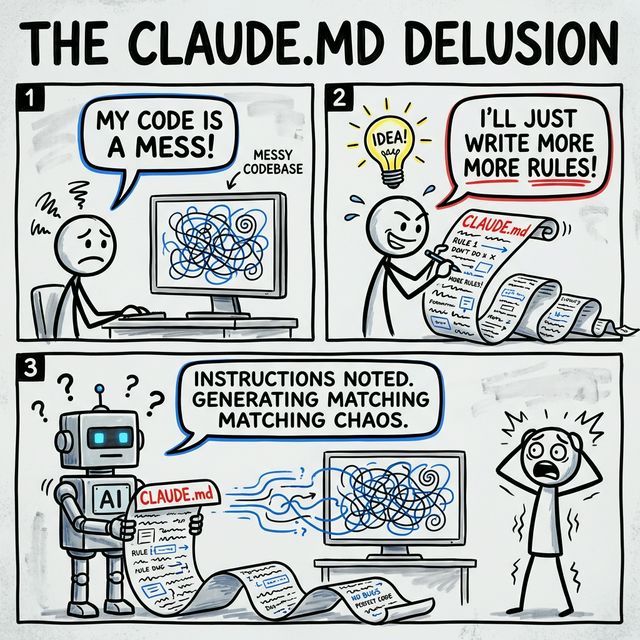

Why a CLAUDE.md can't save a bad codebase

You can't prompt-engineer your way out of a bad foundation.

I see this constantly. Teams with messy codebases (variable names that lie, abstractions that don't abstract, files that do seventeen things) try to compensate by writing elaborate AI instruction files. "Always use camelCase." "Follow the repository pattern." "Don't touch the legacy module." They're trying to describe, in natural language, the coherence their code lacks structurally.

It doesn't work. The AI is reading your codebase, and your codebase has a vibe. A messy codebase vibrates at a frequency of messiness. The AI will pattern-match to that frequency no matter what your instruction file says. It will produce code that "fits," and fitting into a mess means producing more mess.

The starting conditions determine the trajectory.

This connects to the Dark Software Fabric. Types, lint rules, tests: they don't just catch errors, they set the vibe. A codebase with strict types and comprehensive tests reads as high-quality to an AI. A codebase with "any" types everywhere and no tests reads as low-quality, and the output matches.

Single words move trajectories

This is the subtle part. Individual words in the early parts of an interaction can shift the entire output. Not instructions. Not paragraphs. Single words.

"Cool" versus "refined." "Make it work" versus "make it elegant." "A website" versus "a digital experience." These aren't just semantic differences. They're routing signals. Each word nudges the probability distribution, and in the chaotic system of autoregressive generation, small nudges compound into wildly different outcomes.

Chaos theory for tokens. Sensitivity to initial conditions.
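A toy way to picture the compounding, sketched in Python. The word table and its probabilities are entirely made up, and greedy_continue is a hypothetical helper, not how any real model decodes. Real models sample from vastly larger distributions over the full context. The sketch only shows that when each step depends on the previous one, a different first word can lead to a completely different path.

```python
# Invented table: for each word, candidate next words with made-up probabilities.
BIGRAMS = {
    "cool": {"flashy": 0.6, "serif": 0.4},
    "refined": {"serif": 0.7, "flashy": 0.3},
    "flashy": {"gradient": 0.8, "margins": 0.2},
    "serif": {"margins": 0.75, "gradient": 0.25},
    "gradient": {"template": 1.0},
    "margins": {"layout": 1.0},
}

def greedy_continue(first_word, steps=3):
    """Extend first_word by always taking the most probable next word."""
    words = [first_word]
    for _ in range(steps):
        options = BIGRAMS.get(words[-1])
        if not options:  # no known continuation, so stop early
            break
        words.append(max(options, key=options.get))
    return words

print(" ".join(greedy_continue("cool")))
print(" ".join(greedy_continue("refined")))
```

In this table the two starting words only differ modestly in their first-step odds, yet the greedy paths never touch the same word again.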

The 100 Best Books project didn't need the word "elegant" in the prompt because the content itself was dripping with elegance. "Voluptuous chairs inviting communion and reverie" did more prompt engineering than any system message ever could.

The uncomfortable implication

None of this is comfortable for the prompt engineering industry, or for developers who think they can shortcut quality.

The best way to get good output from an AI is to bring good input. Not good prompts. Good substance. The AI amplifies what you give it, along the same axis of quality. Give it mediocrity and it'll produce sophisticated-sounding mediocrity. Give it something you actually care about and the output will surprise you.

The 100 Best Books rebuild surprised me. Not because of a clever prompt, but because the content had been written with actual care over years. That care was the prompt. Everything the AI needed to know about what kind of output to produce was already in the vibe of the input.

The seed is everything

If you take one thing from this: invest in the seed.

Before you write a single prompt, before you touch your CLAUDE.md, ask yourself: is the thing I'm feeding this model actually good? Is it clear? Does it reflect the level of quality I want back?

If not, no amount of prompt engineering will save you. You're trying to grow an oak tree from a weed seed and wondering why your watering instructions aren't helping.

But if the answer is yes, you might not need to prompt-engineer at all. You might just need to say "build this" and watch it happen.

One shot. No instructions. Just good input.